Let's start with the question: what is dbt?

“dbt is a transformation workflow that helps you get more work done while producing higher quality results. You can use dbt to modularize and centralize your analytics code, while also providing your data team with guardrails typically found in software engineering workflows.” You can read more at What is dbt? | dbt Developer Hub (getdbt.com).

There are a couple of options to get started. One is pip with a virtual environment, but I will use pip inside a Docker container.

Let’s get started. First things first: start the Docker container with the command below:

$ docker run -p 8888:8888 -p 8000:8080 -v D:/projects/rnd2/:/home/jovyan/work jupyter/minimal-notebook

In the above command, I am publishing two ports: host port 8888 maps to container port 8888, and host port 8000 maps to container port 8080. The first is used to connect to the Jupyter notebook/terminal, and the second is used to expose the dbt documentation.

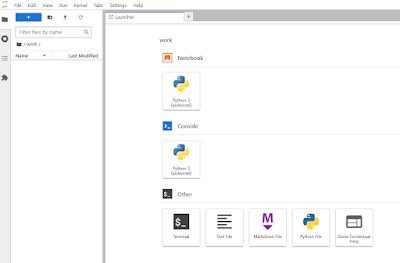

Access your Jupyter notebook server at http://localhost:8888/lab/tree/work, as shown below:

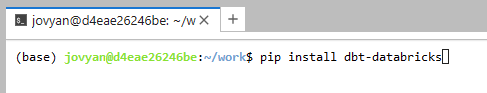

Click on the terminal and execute the pip statement:

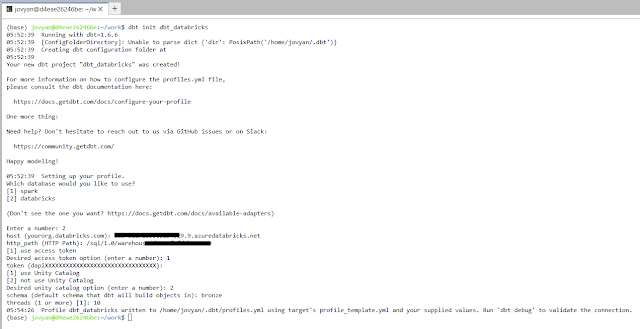

Once installed, run dbt init to create a dbt project, then copy /home/jovyan/.dbt/profiles.yml to your project folder, as shown below:

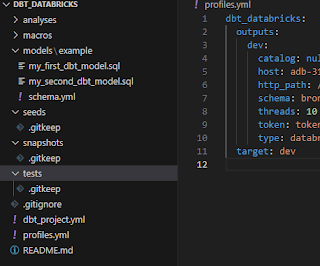

Now that everything is set up, let’s open Visual Studio Code. You can explore the generated code to understand the project structure.

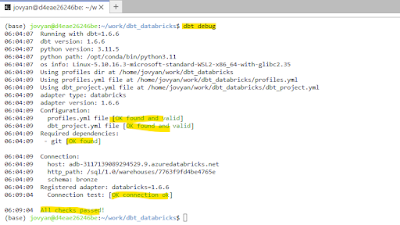

I have generated a Databricks token and added it to the profiles.yml file. Go to the terminal in your Jupyter notebook server and execute the dbt commands.
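For reference, a Databricks profile could look roughly like the sketch below, written with a shell heredoc so it can be pasted into the Jupyter terminal. It assumes the dbt-databricks adapter. The file name profiles.example.yml, the profile name my_dbt_project, the host, and the http_path are placeholders rather than values from this setup, and reading the token from a DATABRICKS_TOKEN environment variable is also an assumption.

```shell
# Sketch only: writes an example profile next to your real profiles.yml
# so nothing is overwritten. Replace every placeholder with your own values.
cat > profiles.example.yml <<'EOF'
my_dbt_project:            # hypothetical; must match the "profile" key in dbt_project.yml
  target: dev
  outputs:
    dev:
      type: databricks
      schema: bronze        # schema the models are built in
      host: <your-workspace-host>
      http_path: <your-sql-warehouse-or-cluster-http-path>
      token: "{{ env_var('DATABRICKS_TOKEN') }}"   # read from the environment
      threads: 1
EOF
```

With a profile like this, the token would be exported as DATABRICKS_TOKEN in the terminal before running any dbt command, so it never lands in the file itself.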

The first command is dbt debug, which validates the configuration.

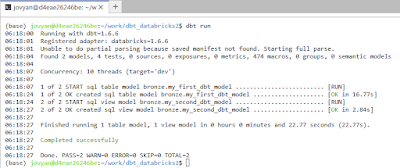

To apply your transformations, run the dbt run command.

Points to remember:

Every time you execute dbt run, the transformations are applied. With this in mind, you can integrate dbt with an orchestration tool like Apache Airflow, with Databricks Workflows, or even with a Docker container that runs your dbt command and then destroys itself.
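The "run and destroy itself" container idea can be sketched as a small entrypoint script. This is a minimal sketch, not the setup used in this post: the script name run_dbt.sh and the image name my-dbt-image in the comment are hypothetical, and it assumes profiles.yml sits in the project folder.

```shell
# Sketch: generate a small entrypoint script that an orchestrator could call.
cat > run_dbt.sh <<'EOF'
#!/bin/sh
# Stop at the first failing step so the caller sees a non-zero exit code.
set -e
dbt debug --profiles-dir .   # validate configuration and connection
dbt run --profiles-dir .     # apply the transformations
dbt test --profiles-dir .    # check the models against their tests
EOF
chmod +x run_dbt.sh

# Example invocation (hypothetical image with dbt installed); --rm removes
# the container once the script exits:
#   docker run --rm -e DATABRICKS_TOKEN -v "$PWD":/work -w /work my-dbt-image ./run_dbt.sh
```

Because the script exits with a non-zero code on the first failure, whichever scheduler launches the container can mark the task as failed without parsing any logs.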

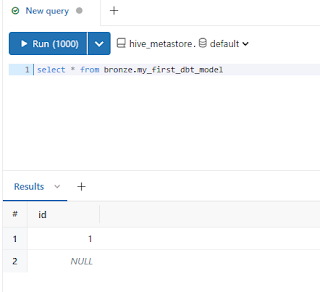

You can go to Databricks and check the bronze schema, which will have 1 table loaded.

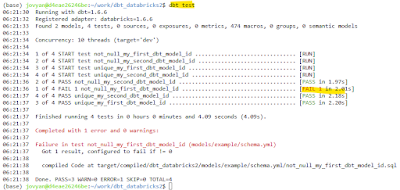

Let's run dbt test. As we know, the first model contains 1 null record, so its not_null test should fail:

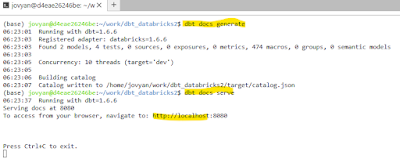

Lets look at documentation part, run dbt docs generate and

then serve. By default it exposes the documentation at port 8080 because we are

running this doc server inside a container and we already mapped our 8000 port

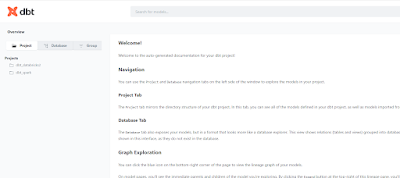

to 8080. So simply access localhost:8000 will take you to dbt generated

documentation
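The two documentation commands can be wrapped in a small helper. This is a minimal sketch: the function name serve_dbt_docs is made up for illustration, and --port 8080 only spells out the default so the mapping to host port 8000 is easy to see.

```shell
# Sketch: build the docs site, then serve it on the container port that
# was mapped to host port 8000 in the docker run command.
serve_dbt_docs() {
  dbt docs generate &&
    dbt docs serve --port 8080   # keeps running until interrupted with Ctrl+C
}
```

Calling serve_dbt_docs from the project folder runs both steps in order. Depending on the dbt version, the server may listen only on the loopback interface inside the container; if localhost:8000 is unreachable from the host, dbt docs serve --help lists the options your version supports.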

Navigate to http://localhost:8000

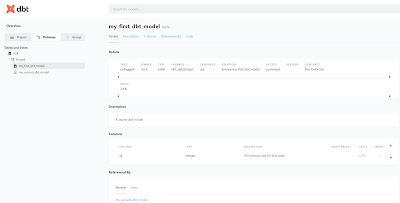

You can view the lineage graph as well.

That’s it for now. Next, I will share how we can use dbt to build a data vault layer.