In this post I will show how to integrate FxCop with Visual Studio 2010 so that you can check your project for rule violations as part of the build.

There are two ways to do this:

1. Adding FxCop as an external tool

2. Adding a post-build script

Alternatively, you can run the FxCop.exe GUI to check your assembly for violations.

I am assuming that you have installed FxCop 1.36 and downloaded the SharePoint.FxCop.BestPractices.dll rule assembly from http://sovfxcoprules.codeplex.com/

Adding FxCop as an External Tool in Visual Studio

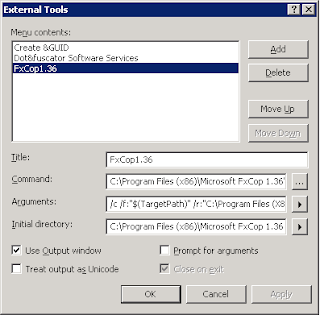

1. Open Visual Studio and go to Tools > External Tools.

2. Click the Add button and specify the following:

Title: FxCop 1.36

Command: C:\Program Files (x86)\Microsoft FxCop 1.36\FxCopCmd.exe

Arguments: /c /f:"$(TargetPath)" /r:"C:\Program Files (X86)\Microsoft FxCop 1.36\Rules" /gac

Initial directory: C:\Program Files (x86)\Microsoft FxCop 1.36

3. Check "Use Output window", as shown in the image below, so that FxCop's report is written to the Output window, and then click OK. You are done. :)

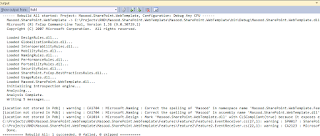

Build your project, then run your new external tool from the Tools menu. FxCop's report appears in the Output window, as shown in the screenshot.
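The Arguments field is a template: when you run the tool, Visual Studio replaces `$(TargetPath)` with the full path of the current project's built assembly before starting FxCopCmd.exe. The Python sketch below only illustrates that substitution so you can see what the final argument string looks like; the `expand_macros` helper and the sample project path are hypothetical, and Visual Studio performs this expansion for you.

```python
import re
from typing import Mapping

# Arguments template copied from the External Tools entry above.
ARGUMENTS_TEMPLATE = (
    '/c /f:"$(TargetPath)" '
    '/r:"C:\\Program Files (X86)\\Microsoft FxCop 1.36\\Rules" /gac'
)

# Matches Visual Studio-style macros such as $(TargetPath).
MACRO_PATTERN = re.compile(r"\$\((\w+)\)")


def expand_macros(template: str, macros: Mapping[str, str]) -> str:
    """Replace each $(Name) token with macros[Name] (hypothetical helper).

    Unknown macros are left as-is so that typos stay visible.
    """
    def substitute(match):
        return macros.get(match.group(1), match.group(0))

    # A function replacement is inserted literally, so backslashes in
    # Windows paths are not treated as escape sequences.
    return MACRO_PATTERN.sub(substitute, template)


if __name__ == "__main__":
    # Hypothetical output path of a project built in the Debug configuration.
    macros = {"TargetPath": r"C:\Projects\MyFeature\bin\Debug\MyFeature.dll"}
    print(expand_macros(ARGUMENTS_TEMPLATE, macros))
```

If FxCop complains that it cannot find the target, comparing the expanded /f: path with the file you just built is a quick first check.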

Adding a Post-Build Script

1. Open your project's properties, go to the Build Events tab and add the following command to the "Post-build event command line" box:

"C:\Program Files (x86)\Microsoft FxCop 1.36\FxCopCmd.exe" /c /file:"$(TargetPath)" /rule:"C:\Program Files (X86)\Microsoft FxCop 1.36\Rules" /gac

2. Copy the rule assemblies you want (for example, SharePoint.FxCop.BestPractices.dll) into the "C:\Program Files (X86)\Microsoft FxCop 1.36\Rules" folder, or point FxCop at the folder that contains them with /rule:"Path to your folder".

3. If your assembly depends on other assemblies, tell FxCop where to find them: use /directory:"Path to your dependency assembly folder" for assemblies on the local file system, or /searchgac (short form /gac) for assemblies in the GAC.

4. To analyze multiple assemblies, repeat the /file switch, for example /file:"Path to your .dll file" /file:"Path to another dll", or pass a folder that contains several .dll files with /file:"Path to a folder where multiple dlls reside".
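If the list of assemblies or rule folders changes often, you may prefer to generate the FxCopCmd call from a script rather than edit the post-build line by hand. The Python sketch below assembles the same switches described in steps 1 to 4 (/c, /file, /rule, /directory and /gac). It assumes Python 3 is installed on the build machine and that FxCop 1.36 is in its default location; `build_fxcop_args`, `run_fxcop` and the sample paths are hypothetical and not part of FxCop.

```python
import subprocess

# Default install location used in this post; adjust if yours differs.
FXCOPCMD = r"C:\Program Files (x86)\Microsoft FxCop 1.36\FxCopCmd.exe"


def build_fxcop_args(files, rules, directories=(), search_gac=True):
    """Assemble an FxCopCmd.exe argument list (hypothetical helper).

    files       -- assemblies, or folders of assemblies, to analyze (/file:)
    rules       -- rule assemblies or rule folders to load (/rule:)
    directories -- folders that hold dependency assemblies (/directory:)
    search_gac  -- also look for dependencies in the GAC (/gac)
    """
    args = [FXCOPCMD, "/c"]  # /c: write results to the console (build output)
    args += [f"/file:{path}" for path in files]
    args += [f"/rule:{path}" for path in rules]
    args += [f"/directory:{path}" for path in directories]
    if search_gac:
        args.append("/gac")
    return args


def run_fxcop(args):
    """Run FxCopCmd.exe and return its exit code.

    On Windows, subprocess quotes list items that contain spaces when it
    builds the command line, so do not embed quotes in the items yourself.
    """
    return subprocess.run(args, check=False).returncode


if __name__ == "__main__":
    # Hypothetical build outputs, rule folder and dependency folder.
    args = build_fxcop_args(
        files=[r"C:\Projects\MyFeature\bin\Debug\MyFeature.dll",
               r"C:\Projects\MyFeature\bin\Debug\MyFeature.Common.dll"],
        rules=[r"C:\Program Files (x86)\Microsoft FxCop 1.36\Rules"],
        directories=[r"C:\Projects\MyFeature\lib"],
    )
    print(args)
    # On a machine with FxCop 1.36 installed: exit_code = run_fxcop(args)
```

Keeping the arguments as a list rather than one long string avoids most quoting mistakes with paths such as "Program Files (x86)".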

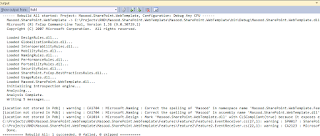

Build your project and you will find all reported violations in the Output window, as shown in the screenshot.

In the next post I will explain how to integrate FxCop with the build pipeline.